This document demonstrates the disassembly of the water shader effect that makes use of a technique known as Environment Mapped Bump Mapping.

Level designer input:

- Environment maps (day and night reflections, both above and below the water)

- Two bump maps (for the large and the small waves)

- Diffuse map (very large scale color modification)

- Scale and movement speed of the two wave maps

High level game input:

- Day/night balance

- Animation time

Final output:

- Animated water with reflections that depend on the time of the day

A quick overview of how this specific implementation works:

- The engine's C++ code calculates the input data for the vertex shader by combining the parameters set by the level designer with the dynamic high-level game input. It also periodically pre-generates a combined environment map from the day/night factor.

- The vertex shader uses this input, along with other inputs such as the world matrix and the observer position, to calculate the on-screen positions of the vertices and the uvs that the pixel shader uses to sample the four textures.

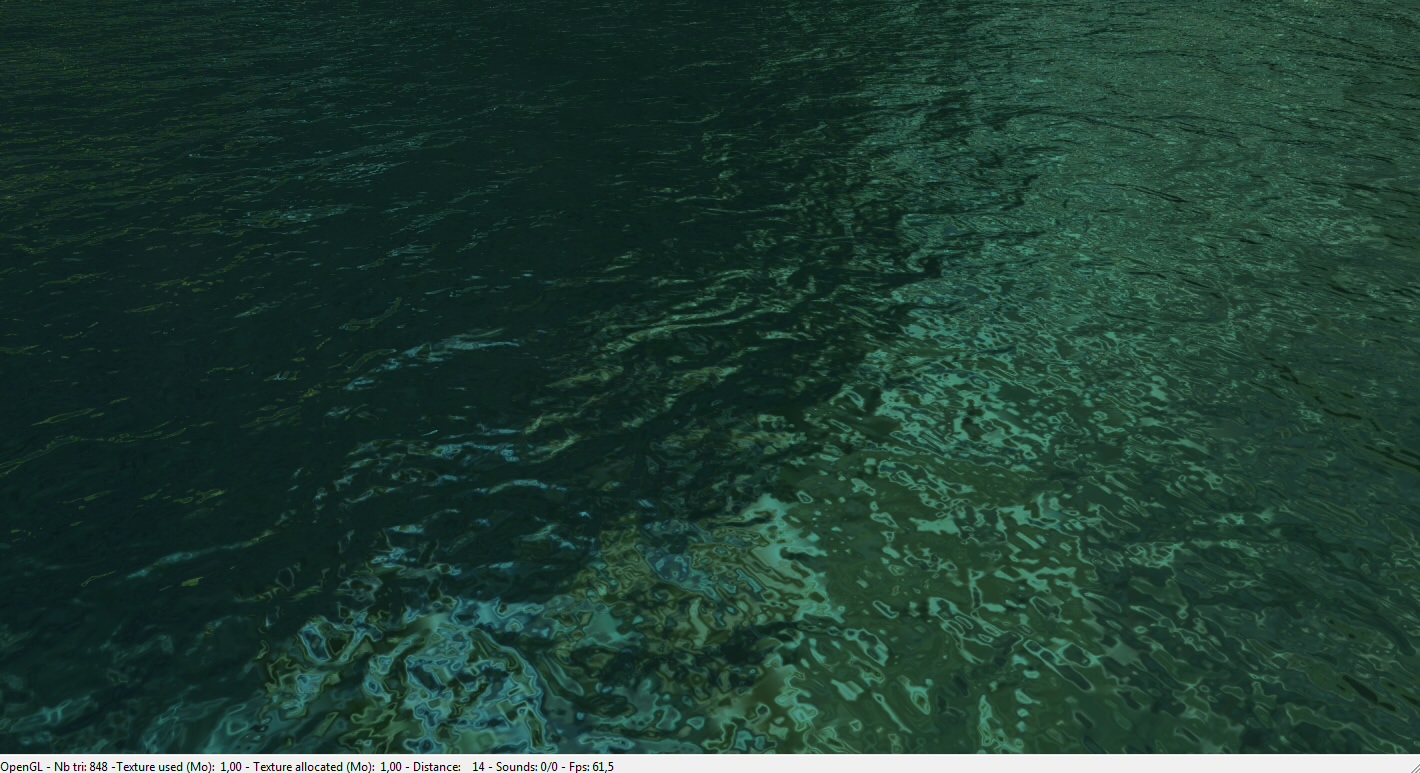

- Finally, the pixel shader blends everything together, giving the result shown in the image above.
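The overview says the engine periodically pre-generates a combined environment map from the day/night factor, but not how the maps are combined. The C++ sketch below assumes the simplest option: a per-texel linear blend of two same-sized RGBA8 maps. `Rgba8`, `blendChannel` and `blendEnvironmentMaps` are hypothetical names, not the engine's code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical RGBA8 texel; the engine's real texture format is not documented.
struct Rgba8 {
    std::uint8_t r, g, b, a;
};

// Linear blend of one channel: dayFactor = 1 gives pure day, 0 gives pure night.
static std::uint8_t blendChannel(std::uint8_t day, std::uint8_t night, float dayFactor) {
    float v = night + (day - night) * dayFactor;
    return static_cast<std::uint8_t>(v + 0.5f); // round to nearest
}

// Hypothetical: rebuild the combined environment map from same-sized day and night maps.
std::vector<Rgba8> blendEnvironmentMaps(const std::vector<Rgba8>& day,
                                        const std::vector<Rgba8>& night,
                                        float dayFactor) {
    assert(day.size() == night.size());
    assert(dayFactor >= 0.0f && dayFactor <= 1.0f);
    std::vector<Rgba8> out(day.size());
    for (std::size_t i = 0; i < day.size(); ++i) {
        out[i] = {blendChannel(day[i].r, night[i].r, dayFactor),
                  blendChannel(day[i].g, night[i].g, dayFactor),
                  blendChannel(day[i].b, night[i].b, dayFactor),
                  blendChannel(day[i].a, night[i].a, dayFactor)};
    }
    return out;
}
```

Doing this on the CPU only at intervals, rather than sampling two maps per pixel, keeps the pixel shader down to a single environment map read.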

¶ Disassembling the Vertex and Pixel Programs

¶ The Vertex Programs

For quick reference, this is the vertex program for Direct3D using Vertex Shader profile 1.0:

```
vertexshader water_vs_1_0 = asm
{
    vs_1_0
    dcl_position v0
    m4x4 oPos, v0, c0           // transform vertex in view space
    dp4 oFog, c4, v0;           // setup fog
    mul r3, v0, c5;             // compute bump 0 uv's
    add oT0, r3, c6;
    mul r3, v0, c7;             // compute bump 1 uv's
    add oT1, r3, c8;
    add r0, c9, -v0;            // r0 = eye - vertex
    dp3 r1, r0, r0;             // r1 = |eye - vertex|^2
    rsq r1, r1.x;               // r1 = 1 / |eye - vertex|
    mul r0, r0, r1;             // r0 = normalize(eye - vertex)
    mad oT2, -r0, c10, c10;     // envmap tex coord
    dp4 oT3.x, v0, c11;         // compute uv for diffuse texture
    dp4 oT3.y, v0, c12;
};
```

And this is the one used with OpenGL using NV Vertex Program 1.0:

```
!!VP1.0
DP4 o[HPOS].x, c[0], v[0];       # transform vertex in view space
DP4 o[HPOS].y, c[1], v[0];
DP4 o[HPOS].z, c[2], v[0];
DP4 o[HPOS].w, c[3], v[0];
DP4 o[FOGC].x, c[4], v[0];       # setup fog
MUL R3, v[0], c[5];              # compute bump 0 uv's
ADD o[TEX0], R3, c[6];
MUL R3, v[0], c[7];              # compute bump 1 uv's
ADD o[TEX1], R3, c[8];
ADD R0, c[9], -v[0];             # R0 = eye - vertex
DP3 R1, R0, R0;                  # R1 = |eye - vertex|^2
RSQ R1, R1.x;                    # R1 = 1 / |eye - vertex|
MUL R0, R0, R1;                  # R0 = normalize(eye - vertex)
MAD o[TEX2], -R0, c[10], c[10];  # envmap tex coord
DP4 o[TEX3].x, v[0], c[11];      # compute uv for diffuse texture
DP4 o[TEX3].y, v[0], c[12];
END
```

¶ A Disassembly of the Vertex Programs

The Direct3D and OpenGL versions of the vertex program are practically identical. There are two small differences. First, the Direct3D version begins with an input register declaration (dcl_position v0), which is used to check that the vertex buffer's layout matches what the shader expects. Second, Direct3D needs only a single instruction to multiply a 4-component vector by a 4x4 matrix:

```
m4x4 oPos, v0, c0 // transform vertex in view space
```

whereas the OpenGL version has to spell out all four dot products:

```
DP4 o[HPOS].x, c[0], v[0]; # transform vertex in view space
DP4 o[HPOS].y, c[1], v[0];
DP4 o[HPOS].z, c[2], v[0];
DP4 o[HPOS].w, c[3], v[0];
```

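In reality, Direct3D's m4x4 is a macro instruction: it occupies four instruction slots and performs exactly the same four dot products as the DP4 lines above. Either form is essentially all that is needed to transform a vertex to its final position. The "view space" in the comment is loose, though. Because c[0] to c[3] hold the combined world-view-projection matrix, the result is in homogeneous clip space, which the hardware's perspective divide and viewport transform then map to the screen.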
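The equivalence can be checked with a small C++ model of the two instructions. It assumes the matrix rows sit in four consecutive constant registers, as they do in c[0] to c[3]; `dp4` and `m4x4` here are illustrative functions, not part of any API.

```cpp
#include <array>
#include <cassert>

using Vec4 = std::array<float, 4>;
// A 4x4 matrix stored as four rows, one per constant register c[0]..c[3].
using Mat4Rows = std::array<Vec4, 4>;

// DP4: four-component dot product.
float dp4(const Vec4& a, const Vec4& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2] + a[3] * b[3];
}

// What "m4x4 oPos, v0, c0" amounts to: one DP4 per output component,
// each against the next consecutive constant register.
Vec4 m4x4(const Vec4& v, const Mat4Rows& c) {
    return {dp4(v, c[0]), dp4(v, c[1]), dp4(v, c[2]), dp4(v, c[3])};
}
```

With a translation by (10, 20, 30) in the rows, the position (1, 2, 3, 1) comes out as (11, 22, 33, 1), exactly what the four DP4 lines would write to o[HPOS].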

The v[0] register is the first vertex input register, which in this case holds the vertex position read from the vertex buffer. All vertex shader versions from 1.0 up to 3.0 provide a fixed number of 16 vertex input registers.

The engine's C++ code places the world-view-projection matrix in the four constant registers c[0] to c[3]. Constant registers are set by the application before a draw call and stay the same for every vertex processed in it; the vertex program can read them but cannot change them. Vertex profile 1.x guarantees at least 96 constant registers, and version 2.0 and upwards guarantee at least 256 (enough for 64 4x4 matrices). Some graphics cards support more; in Direct3D 9 the actual number is reported in the MaxVertexShaderConst member of D3DCAPS9.

The result is written to the output register o[HPOS] (oPos in the Direct3D version), which holds the 4-component homogeneous position. The rasterizer uses it to work out which pixels each triangle covers; this pixel program never reads it directly.
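Which values end up in c[0] to c[3] depends on the engine's matrix convention. D3DX-style matrices, for example, are written for row vectors (v × M), while the DP4 sequence computes M × v one register at a time. Such a matrix therefore has to be transposed before upload; in Direct3D 9 the upload itself would be a call like IDirect3DDevice9::SetVertexShaderConstantF(0, data, 4). The sketch below checks that a transposed upload reproduces the row-vector product. `ConstantFile`, `uploadTransposed` and `transformLikeShader` are hypothetical stand-ins, not the engine's code.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>; // m[row][col]

// Hypothetical stand-in for the 96 float4 constant registers of vertex profile 1.x.
struct ConstantFile {
    std::array<Vec4, 96> c{};
};

// Row-vector product v * M, the convention used by D3DX-style matrices.
Vec4 mulRowVector(const Vec4& v, const Mat4& m) {
    Vec4 r{};
    for (int col = 0; col < 4; ++col)
        for (int k = 0; k < 4; ++k)
            r[col] += v[k] * m[k][col];
    return r;
}

// Upload M transposed, so that register start + i holds column i of M.
void uploadTransposed(ConstantFile& cf, std::size_t start, const Mat4& m) {
    assert(start + 4 <= cf.c.size());
    for (std::size_t i = 0; i < 4; ++i)
        for (std::size_t j = 0; j < 4; ++j)
            cf.c[start + i][j] = m[j][i];
}

// The four DP4 instructions of the vertex program, reading c[start]..c[start + 3].
Vec4 transformLikeShader(const ConstantFile& cf, std::size_t start, const Vec4& v) {
    Vec4 r{};
    for (std::size_t i = 0; i < 4; ++i) {
        const Vec4& row = cf.c[start + i];
        r[i] = row[0] * v[0] + row[1] * v[1] + row[2] * v[2] + row[3] * v[3];
    }
    return r;
}
```

For a row-vector translation matrix (translation in the bottom row), uploading it untransposed would leave the translation in c[3] and produce a wrong w, while the transposed upload gives the same result as v × M.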

The next line of the vertex program calculates the fog value. The fog output register is a special case: it is one of only two vertex program output registers that hold a single floating point value (the other is the point size register, oPts in Direct3D and o[PSIZ] in NV_vertex_program), whereas the remaining output registers hold four. Like all other registers, it is used the same way in both OpenGL and Direct3D.

```
DP4 o[FOGC].x, c[4], v[0]; # setup fog
```

The c[4] constant register is set by the engine to a fog vector derived from the modelview matrix, typically its depth row, so the dot product with the vertex position returns the fog coordinate (the eye-space depth). On legacy hardware, this value is consumed by the fixed-function pipeline to apply the fog equation; on ARB-capable hardware, a fragment program can also read it directly as fragment.fogcoord. Vertex profile 3.0 no longer has this output register, because all output registers have been collapsed into 12 general purpose output registers.
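To see what happens to the fog coordinate afterwards, here is a C++ sketch of the DP4 followed by the fixed-function linear fog equation, f = (end - d) / (end - start), where f = 1 means no fog. It assumes c[4] selects the eye-space depth (the test uses a simplified vector that just picks z); `fogCoordinate` and `linearFogFactor` are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

using Vec4 = std::array<float, 4>;

// DP4 o[FOGC].x, c[4], v[0]: dot product of the fog vector with the position.
float fogCoordinate(const Vec4& fogVector, const Vec4& position) {
    return fogVector[0] * position[0] + fogVector[1] * position[1] +
           fogVector[2] * position[2] + fogVector[3] * position[3];
}

// Fixed-function linear fog: 1 = no fog, 0 = fully fogged, clamped to [0, 1].
float linearFogFactor(float distance, float fogStart, float fogEnd) {
    assert(fogEnd > fogStart);
    float f = (fogEnd - distance) / (fogEnd - fogStart);
    return std::max(0.0f, std::min(1.0f, f));
}
```

For example, a vertex at depth 50 with fog running from 0 to 100 gets a factor of 0.5, so its final color is an even mix of the water color and the fog color.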

The two lines that follow calculate the uv coordinates of the bump map that provides the smaller details, using the temporary register R3 for the intermediate result.

```
MUL R3, v[0], c[5]; # compute bump 0 uv's
ADD o[TEX0], R3, c[6];
```

The vertex position is multiplied component-wise by the artist-defined scale in c[5], and the texture offset in constant register c[6], which the engine calculates from the game's animation time, is added to the result. Only the x and y components are used later, since the pixel shader declares just t0.xy.

For the large scale detail bump map, the same calculation is used.

```
MUL R3, v[0], c[7]; # compute bump 1 uv's
ADD o[TEX1], R3, c[8];
```

Here the scale is in c[7] and the offset is in c[8]. The uv coordinate is written to o[TEX1], one of the texture coordinate output registers. All vertex profiles up to 2.0 have eight of these, and in vertex profile 3.0 they are part of the 12 general purpose output registers.
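For either bump layer, the computation amounts to the C++ sketch below. The shader only receives the final offset in c[6] or c[8], and the engine code that derives it is not shown. `bumpOffset` therefore assumes offset = movement speed × time, wrapped into [0, 1). Wrapping keeps the constant small and precise as the time grows, and is invisible with a wrapping texture address mode. All names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec2 = std::array<float, 2>;

// Hypothetical engine helper: offset = speed * time, wrapped into [0, 1).
Vec2 bumpOffset(const Vec2& speed, float timeSeconds) {
    Vec2 o{};
    for (int i = 0; i < 2; ++i) {
        float t = speed[i] * timeSeconds;
        o[i] = t - std::floor(t); // drop whole texture repeats
    }
    return o;
}

// MUL R3, v[0], c[5]; ADD o[TEX0], R3, c[6] (only x and y are used later).
Vec2 bumpUv(const Vec2& positionXY, const Vec2& scale, const Vec2& offset) {
    return {positionXY[0] * scale[0] + offset[0], positionXY[1] * scale[1] + offset[1]};
}
```

Giving the two layers different scales and speeds is what keeps the combined pattern from visibly repeating.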

The environment map's texture coordinates make use of the c[9] constant register, which the engine sets up to contain the height of the observer above the water surface, with the other components zeroed out. Since the observer's horizontal coordinates are zero, the vertex positions are presumably expressed relative to the observer's horizontal position. First, the difference between the observer position and the vertex position (eye minus vertex) is calculated and placed in the temporary register R0. DP3 places the squared length of this vector in R1, RSQ replaces that with the reciprocal of the length, and MUL multiplies R0 by it, normalizing the vector from the vertex to the eye.

```
ADD R0, c[9], -v[0];             # R0 = eye - vertex
DP3 R1, R0, R0;                  # R1 = |eye - vertex|^2
RSQ R1, R1.x;                    # R1 = 1 / |eye - vertex|
MUL R0, R0, R1;                  # R0 = normalize(eye - vertex)
MAD o[TEX2], -R0, c[10], c[10];  # envmap tex coord
```

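R0 now holds the normalized vector from the vertex to the eye. The MAD negates it, which gives the normalized view direction. Because the water surface is horizontal, reflecting this direction off the water only flips its vertical component, so its x and y are also those of the reflected ray. The c[10] constant register contains (0.5, 0.5), so multiplying by it and adding it again maps the -1.0 to 1.0 range onto 0.0 to 1.0. The result is placed in the o[TEX2] texture coordinate output register.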
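Here are the same five instructions in C++ for a single vertex. This is a sketch that assumes, as c[9] suggests, that positions are relative to the observer so the eye sits at (0, 0, height). Like DP3, it uses only x, y and z. A four-component dot product would also pick up the w difference (0 - 1 = -1 with a zeroed c[9].w and a position w of 1) and overestimate the squared length by 1. `envMapUv` is a hypothetical name.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;
using Vec2 = std::array<float, 2>;

// uv = -normalize(eye - vertex).xy * 0.5 + 0.5
Vec2 envMapUv(const Vec3& eye, const Vec3& vertex) {
    // ADD R0, c[9], -v[0]
    Vec3 toEye = {eye[0] - vertex[0], eye[1] - vertex[1], eye[2] - vertex[2]};
    // DP3 R1, R0, R0
    float lenSq = toEye[0] * toEye[0] + toEye[1] * toEye[1] + toEye[2] * toEye[2];
    assert(lenSq > 0.0f);
    // RSQ R1, R1.x
    float invLen = 1.0f / std::sqrt(lenSq);
    // MUL R0, R0, R1, then MAD with c[10] = 0.5: negate, scale, bias into [0, 1].
    return {-toEye[0] * invLen * 0.5f + 0.5f, -toEye[1] * invLen * 0.5f + 0.5f};
}
```

A vertex directly below the observer maps to the center of the environment map, (0.5, 0.5). A vertex 3 units to the side of that point, under an observer at height 4, maps to (0.8, 0.5). Distant water therefore samples near the edge of the map, where the horizon reflection would be stored.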

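The last two lines compute the uv coordinates of the diffuse texture from the vectors the engine places in the c[11] and c[12] registers. Because the position's w component is 1, each DP4 evaluates a·x + b·y + c·z + d, so these two registers act as a planar projection that encodes the diffuse map's scale, orientation and offset.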

```
DP4 o[TEX3].x, v[0], c[11]; # compute uv for diffuse texture
DP4 o[TEX3].y, v[0], c[12];
```
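What the engine actually stores in c[11] and c[12] is not documented. The C++ sketch below assumes the simplest case: an axis-aligned projection in which a hypothetical `planeFor` helper builds a plane vector from a scale and an offset, so that one diffuse texture repeat covers a fixed area of water.

```cpp
#include <array>
#include <cassert>

using Vec4 = std::array<float, 4>;
using Vec2 = std::array<float, 2>;

float dp4(const Vec4& a, const Vec4& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2] + a[3] * b[3];
}

// Hypothetical: plane vector with dp4(position, plane) == position[axis] * scale + offset,
// which relies on the position's w being 1.
Vec4 planeFor(int axis, float scale, float offset) {
    assert(axis >= 0 && axis < 3);
    Vec4 p = {0.0f, 0.0f, 0.0f, offset};
    p[axis] = scale;
    return p;
}

// DP4 o[TEX3].x, v[0], c[11]; DP4 o[TEX3].y, v[0], c[12]
Vec2 diffuseUv(const Vec4& position, const Vec4& c11, const Vec4& c12) {
    return {dp4(position, c11), dp4(position, c12)};
}
```

With a scale of 1/1024 and an offset of 0.5, a position at (512, -256) lands at uv (1.0, 0.25), so one repeat of the diffuse map spans 1024 units. A rotated projection would simply spread the scale over more than one component of each plane vector.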

¶ Translation of the Vertex Program to a High Level shading language

Here is the same vertex program again, this time in nVidia's Cg language:

```
void vp_water(
    // Per vertex parameters
    float4 position : POSITION,

    // Vertex program constants
    uniform float4x4 cWorldViewProj,    // c[0-3] world * view * projection
    uniform float4 cFog,                // c[4] fog vector
    uniform float2 cBump0Scale,         // c[5]
    uniform float2 cBump0Offset,        // c[6]
    uniform float2 cBump1Scale,         // c[7]
    uniform float2 cBump1Offset,        // c[8]
    uniform float4 cObserverHeight,     // c[9]
    uniform float4 cDiffuseUvLookup0,   // c[11]
    uniform float4 cDiffuseUvLookup1,   // c[12]

    // Output to fragment program
    out float4 oPos  : POSITION,
    out float  oFog  : FOG,
    out float2 oTex0 : TEXCOORD0,
    out float2 oTex1 : TEXCOORD1,
    out float2 oTex2 : TEXCOORD2,
    out float2 oTex3 : TEXCOORD3)
{
    oPos = mul(cWorldViewProj, position);
    oFog = dot(cFog, position);

    oTex0 = (position.xy * cBump0Scale) + cBump0Offset;
    oTex1 = (position.xy * cBump1Scale) + cBump1Offset;

    // Like DP3 in the assembly, use only xyz: the w difference (0 - 1)
    // must not contribute to the length.
    float3 toEye = cObserverHeight.xyz - position.xyz;
    float invLength = rsqrt(dot(toEye, toEye));
    float3 toEyeDir = toEye * invLength;
    // c[10] = (0.5, 0.5): map [-1, 1] to [0, 1]
    oTex2 = (-toEyeDir.xy * float2(0.5, 0.5)) + float2(0.5, 0.5);

    oTex3.x = dot(position, cDiffuseUvLookup0);
    oTex3.y = dot(position, cDiffuseUvLookup1);
}
```

¶ The Fragment Programs

In Direct3D, fragment programs are called pixel shaders. For quick reference, here is the fragment program used for the water in Direct3D:

```
pixelshader water_ps_2_0 = asm
{
    ps_2_0;
    dcl t0.xy;
    dcl t1.xy;
    dcl t2.xy;
    dcl t3.xy;
    dcl_2d s0;
    dcl_2d s1;
    dcl_2d s2;
    dcl_2d s3;

    // read bump map 0
    texld r0, t0, s0;
    // bias result (include scaling)
    mad r0.xy, r0, c0.z, c0;
    add r0.xy, r0, t1;

    // read bump map 1
    texld r0, r0, s1;
    // bias result (include scaling)
    mad r0.xy, r0, c1.z, c1;
    // add envmap coord
    add r0.xy, r0, t2;

    // read envmap
    texld r0, r0, s2;
    // read diffuse
    texld r1, t3, s3;

    mul r0, r0, r1;
    mov oC0, r0
};
```

To save you from some more boring code, I'll leave out the OpenGL one.

¶ Disassembling the Fragment Program

The Direct3D pixel shader assembly starts with register declarations. They state that it reads the xy components of t0 to t3, the texture coordinates interpolated from the vertex shader outputs, and that it uses the four 2D texture samplers s0 to s3.

To read a value from a texture at a given uv coordinate, the texld instruction is used.

```
texld r0, t0, s0;
```

This samples the texture bound to s0 at the coordinate in t0 and stores the result in r0. The artist supplies this first texture as a height map, but the engine converts it at load time into a DsDt texture. Its channels hold texture coordinate offsets derived from the original height map, which are used to perturb the texture coordinate of the second bump map.
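The source does not show the conversion itself. One common way to derive such offsets is from the height map's slope along each axis. The C++ sketch below does this with wrap-around central differences so the tiling wave texture stays seamless. `DsDt`, `heightToDsDt` and the `strength` factor are hypothetical, and the engine may use a different filter or sign convention. Stored in an unsigned texture format, each value would become ds × 0.5 + 0.5, which the shader's bias of minus half the scale then re-centers.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical DsDt texel: signed texture coordinate offsets in [-1, 1].
struct DsDt {
    float ds, dt;
};

// Derive offsets from a tileable height map (values in [0, 1]) with wrap-around
// central differences; 'strength' is a hypothetical tuning factor.
std::vector<DsDt> heightToDsDt(const std::vector<float>& heights,
                               std::size_t width, std::size_t rows, float strength) {
    assert(width > 0 && rows > 0 && heights.size() == width * rows);
    auto at = [&](std::size_t x, std::size_t y) {
        return heights[(y % rows) * width + (x % width)];
    };
    auto clampUnit = [](float v) { return std::max(-1.0f, std::min(1.0f, v)); };
    std::vector<DsDt> out(width * rows);
    for (std::size_t y = 0; y < rows; ++y) {
        for (std::size_t x = 0; x < width; ++x) {
            // Adding width - 1 (or rows - 1) before the modulo steps back one texel with wrap-around.
            float dhdx = 0.5f * (at(x + 1, y) - at(x + width - 1, y));
            float dhdy = 0.5f * (at(x, y + 1) - at(x, y + rows - 1));
            out[y * width + x] = {clampUnit(dhdx * strength), clampUnit(dhdy * strength)};
        }
    }
    return out;
}
```

Doing this once at load time means the pixel shader gets ready-made offsets from a single texture read instead of sampling neighboring heights every frame.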

```
mad r0.xy, r0, c0.z, c0;
add r0.xy, r0, t1;
```

This is a technique commonly known as Environment Mapped Bump Mapping, or EMBM for short. The mad instruction first multiplies the sampled value by a scaling factor in c0.z, set by the engine, which determines how strongly the bumps distort the reflection. It then adds the first two values of this constant, which are set to minus half the scaling factor to compensate for the offset introduced by the scaling: for texture values in the 0 to 1 range, a stored 0.5 gives no offset, and the result lies between -scale/2 and +scale/2. The final offset is then added to the texture coordinate of the second wave map to get its final texture coordinate. The second bump map is read at that coordinate, and its value is used in the same manner (with c1) to offset the environment map's texture coordinate.

```
texld r0, r0, s1;
mad r0.xy, r0, c1.z, c1;
add r0.xy, r0, t2;
```
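The scale-and-bias step can be modeled on the CPU as follows. The sketch assumes, as the bias of minus half the scale implies, that the sampled values lie in the 0 to 1 range with 0.5 meaning "no offset". `perturbUv` and `makeScaleBias` are hypothetical names. The same function serves both dependent reads: first to offset the second bump map's uv (t1) with c0, then to offset the environment map's uv (t2) with c1.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec2 = std::array<float, 2>;
using Vec4 = std::array<float, 4>;

// mad r0.xy, r0, c.z, c followed by add r0.xy, r0, t:
// scale the sampled value, re-center it with the bias in c.xy, then offset the next uv.
Vec2 perturbUv(const Vec2& sampled, const Vec4& scaleBias, const Vec2& nextUv) {
    return {sampled[0] * scaleBias[2] + scaleBias[0] + nextUv[0],
            sampled[1] * scaleBias[2] + scaleBias[1] + nextUv[1]};
}

// Hypothetical engine-side constant: xy = bias (-scale / 2), z = scale.
Vec4 makeScaleBias(float scale) {
    return {-0.5f * scale, -0.5f * scale, scale, 0.0f};
}
```

A flat area of the wave map (stored value 0.5) leaves the next coordinate untouched, while the steepest slopes shift it by half the scale. This is why the scale in c0.z and c1.z directly controls how choppy the reflection looks.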

Now that all the necessary values have been calculated, it is simply a matter of reading in the value of the environment and diffuse maps.

```
// read envmap
texld r0, r0, s2;
// read diffuse
texld r1, t3, s3;
```

The environment map color is then multiplied by the diffuse color:

```
mul r0, r0, r1;
mov oC0, r0
```

Finally, the resulting color for this pixel is written to the color output register oC0.

¶ The Fragment Program translated into nVidia Cg

Here's the fragment program again, translated into nVidia's higher level Cg shading language:

```
void fp_water(
    // Per pixel parameters
    float2 bump0TexCoord   : TEXCOORD0,
    float2 bump1TexCoord   : TEXCOORD1,
    float2 envMapTexCoord  : TEXCOORD2,
    float2 diffuseTexCoord : TEXCOORD3,

    // Fragment program constants
    uniform float4 cBump0ScaleBias,           // c0: xy = bias, z = scale
    uniform float4 cBump1ScaleBias,           // c1: xy = bias, z = scale
    uniform sampler2D bump0Tex   : TEXUNIT0,
    uniform sampler2D bump1Tex   : TEXUNIT1,
    uniform sampler2D envMapTex  : TEXUNIT2,
    uniform sampler2D diffuseTex : TEXUNIT3,

    // Output color
    out float4 oCol : COLOR)
{
    float2 bmValue = tex2D(bump0Tex, bump0TexCoord).xy;
    bmValue = (bmValue * cBump0ScaleBias.z) + cBump0ScaleBias.xy;
    bmValue = bmValue + bump1TexCoord;

    bmValue = tex2D(bump1Tex, bmValue).xy;
    bmValue = (bmValue * cBump1ScaleBias.z) + cBump1ScaleBias.xy;
    bmValue = bmValue + envMapTexCoord;

    float4 envMap  = tex2D(envMapTex, bmValue);
    float4 diffuse = tex2D(diffuseTex, diffuseTexCoord);
    oCol = envMap * diffuse;
}
```

¶ Credits

- Jan Boon